Using Kubernetes with Multiple Containers for Initialization and Maintenance

Update 23.04.2018: Added demo and link to conference talk video/slides at the end of this article

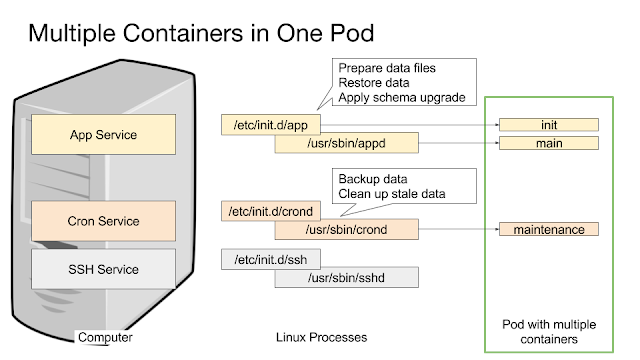

Kubernetes is a great way to run applications because it allows us to manage single Linux processes with a real cluster manager. A computer with multiple services is typically implemented as a pod with multiple containers sharing communication and storage.

Ideally every container runs only a single process. On Linux, most applications have three phases, each typically with its own program or script:

- The initialization phase, typically an init script or a systemd unit file.

- The run phase, typically a binary or a script that runs a daemon.

- The maintenance phase, typically a script run as a CRON job.

While it is possible to put the initialization phase into a Docker container as part of the ENTRYPOINT script, that approach gives much less control over the entire process and makes it impossible to use different security contexts for each phase, e.g. to prevent the main application from directly accessing the backup storage.

Initialization Containers

Kubernetes offers initContainers to solve this problem: regular containers that run before the main containers within the same pod. They can mount the data volumes of the main application container and "lay the ground" for the application. Furthermore, they share the network configuration with the application container. Such an initContainer can also contain completely different software or use credentials not available to the main application.

Typical use cases for initContainers are:

- Performing an automated restore from backup in case the data volume is empty (initial setup after a major outage) or contains corrupt data (automatically recover the last working version).

- Doing database schema updates and other data maintenance tasks that depend on the main application not running.

- Ensuring that the application data is in a consistent and usable state, repairing it if necessary.
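The first use case, restoring into an empty data volume, can be sketched as a small entrypoint script for the initContainer. This is only an illustration: the function names `needs_restore` and `init_data`, the `/data` mount path and the `/restore-from-backup.sh` command are assumptions, not part of the original setup, and the check covers only the "volume is empty" case, not corrupt data.

```shell
#!/bin/sh
# Sketch of an initContainer entrypoint: restore only if the data volume is empty.

# needs_restore DIR: succeeds if DIR is missing or contains no entries
needs_restore() {
  [ ! -d "$1" ] || [ -z "$(ls -A "$1" 2>/dev/null)" ]
}

# init_data DIR RESTORE_COMMAND...: run the restore command only when needed
init_data() {
  dir=$1
  shift
  if needs_restore "$dir"; then
    echo "data volume $dir is empty, restoring from backup"
    "$@"
  else
    echo "data volume $dir already contains data, skipping restore"
  fi
}

# Example call; /restore-from-backup.sh is a hypothetical placeholder:
# init_data /data /restore-from-backup.sh /data
```

Because the initContainer exits after this script finishes, the main application only ever sees a data volume that has already been checked.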

The same logic also applies to maintenance tasks that need to happen repeatedly during the runtime of an application. Traditionally CRON jobs are used to schedule such tasks. Kubernetes does not (yet) offer a mechanism to start a container periodically on an existing pod. Kubernetes CronJobs run as independent pods that cannot share data volumes with running pods.

One widespread solution is running a CRON daemon together with the application in a shared container. This not only breaks the Kubernetes concept but also adds a lot of complexity, as you now also have to take care of managing multiple processes within one container.

Maintenance Containers

A more elegant solution is using a sidecar container that runs alongside the application container within the same pod. Like the initContainer, such a container shares the network environment and can also access data volumes from the pod. A typical application with init phase, run phase and maintenance phase looks like this on Kubernetes:

This example also shows an S3 bucket that is used for backups. The initContainer has exclusive access before the main application starts. It checks the data volume and restores data from backup if needed. Then both the main application container and the maintenance container are started and run in parallel. The maintenance container waits for the appropriate time and performs its maintenance task. Then it again waits for the next maintenance time and so on.
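As a rough sketch of this layout, the following shell function prints a minimal pod manifest with one initContainer, the application container and a maintenance sidecar, all mounting the same volume. The pod name, container names and `example/...` images are hypothetical, and an `emptyDir` volume stands in for the real data volume only to keep the example self-contained.

```shell
#!/bin/sh
# Sketch: print a pod manifest with init, run and maintenance containers.
# All names and images below are placeholders for illustration.
pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: app-with-maintenance
spec:
  volumes:
    - name: data
      emptyDir: {}          # placeholder; a real setup would use persistent storage
  initContainers:
    - name: restore         # runs to completion before the other containers start
      image: example/restore:latest
      volumeMounts:
        - name: data
          mountPath: /data
  containers:
    - name: app             # the main application
      image: example/app:latest
      volumeMounts:
        - name: data
          mountPath: /data
    - name: maintenance     # sidecar that waits and then runs the maintenance task
      image: example/maintenance:latest
      env:
        - name: RUNAT
          value: "03:00"
      volumeMounts:
        - name: data
          mountPath: /data
EOF
}

# Usage (requires a cluster): pod_manifest | kubectl apply -f -
```

Since each container has its own image and environment, backup credentials can be given to the restore and maintenance containers without exposing them to the application container.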

Simple CRON In Bash

The maintenance container can now contain a CRON daemon (for example Alpine Linux ships with dcron) that runs one or several jobs. If you have just a single job that needs to run once a day you can also get by with this simple Bash script. It takes the maintenance time in the RUNAT environment variable.
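The script itself is not reproduced here, so the following is only a minimal sketch of the idea, not the original. It assumes that RUNAT holds a 24-hour `HH:MM` time in the container's local time zone; the helper names `dec`, `seconds_until` and `run_daily` and the file names in the usage comment are made up for illustration. It avoids Bash-only syntax so that it should also run in a plain `sh`.

```shell
#!/bin/sh
# Sketch only: run a command once a day at the time given in RUNAT (HH:MM).

# dec NUM: print NUM without one leading zero, so "08" is not read as octal
dec() {
  d=${1#0}
  echo "${d:-0}"
}

# seconds_until RUNAT NOW
# Prints the seconds from NOW (HH:MM:SS) to the next occurrence of RUNAT (HH:MM).
# The body runs in a subshell, so IFS and the variables stay local.
seconds_until() (
  IFS=:
  set -- $1 $2   # split into: run hour, run minute, now hour, now minute, now second
  target=$(( $(dec "$1") * 3600 + $(dec "$2") * 60 ))
  now=$(( $(dec "$3") * 3600 + $(dec "$4") * 60 + $(dec "$5") ))
  diff=$(( target - now ))
  # If the time has already passed today (or is right now), wait until tomorrow
  [ "$diff" -gt 0 ] || diff=$(( diff + 86400 ))
  echo "$diff"
)

# run_daily COMMAND...: sleep until RUNAT, run the command, repeat forever
run_daily() {
  while true; do
    sleep "$(seconds_until "$RUNAT" "$(date +%H:%M:%S)")"
    "$@" || echo "maintenance job failed" >&2
  done
}

# Start the loop only when a command was passed, for example:
#   RUNAT=03:00 ./runat.sh /backup.sh
if [ "$#" -gt 0 ]; then
  : "${RUNAT:?RUNAT must be set to HH:MM}"
  run_daily "$@"
fi
```

Recomputing the sleep time on every iteration keeps the schedule from drifting when a job takes a long time. A failing job is only logged, so the maintenance container keeps running and tries again the next day.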

All of this also holds true for other Docker cluster environments such as Docker Swarm mode. However you package your software, Kubernetes offers a great way to simplify Linux servers by dealing directly with the relevant Linux processes from a cluster perspective. Everything else in a traditional Linux system is now obsolete for applications running on Kubernetes or other Docker environments.

Demo

In https://gist.github.com/schlomo/1ee15695cc04a6324db4f4919c7754ec you will find a complete demo that implements a simple Kubernetes pod with a WebDAV server that uses git as a backup tool (bad idea, good for demo) to persist the storage volume in a GitHub repo, demo-data.

To simplify the SSH key handling I use ssh-url-with-ssh-key. To prevent abuse I disabled write access for the SSH key included in the demo files. If you run the demo as it is, you will get a WebDAV server with the content of the demo-data repo, but changes won't be stored in my GitHub repo. Simply create your own git repo with its own deploy key to also see the backup part of the demo.

The Kubernetes Deployment with the initContainer is defined in deployment.yaml in that gist.

To try this out you can start minikube on your local desktop. Once it is up and running, simply apply both Kubernetes manifests like this:

    $ kubectl apply \
        -f https://gist.githubusercontent.com/schlomo/1ee15695cc04a6324db4f4919c7754ec/raw/deployment.yaml \
        -f https://gist.githubusercontent.com/schlomo/1ee15695cc04a6324db4f4919c7754ec/raw/service.yaml

As a result you get the running service and deployment:

At microxchg 2018 I gave a talk about this topic; you can view the slides or watch the video.
